Intro – Tools Don’t Create Culture, but They Multiply It

There’s a hard truth every data leader learns sooner or later: software never repairs a broken mindset. You can plaster your stack with every hot logo on the Modern Data Stack bingo card—Snowflake, Databricks, Fivetran, the lot—and still watch dashboards collapse if people keep thinking, “Data quality is somebody else’s job.”

Yet when a company embraces shift-left thinking—treating data as a product, pushing ownership upstream, and baking quality into the very first mile—the right platform suddenly behaves like a set of power tools. The chores that once took days of manual effort are finished in minutes; errors that once slipped into production are stopped at the point of data collection. Below is a walkthrough of those power tools and the capabilities they unlock.

1. Transformation-as-Code

Turn business logic into version-controlled, testable modules

For years, business logic hid in fragile SQL files on personal laptops; one wrong copy-and-paste and the company’s definition of “active user” changed overnight. But hey, that’s what dbt comes to solve. By letting analysts write models as version-controlled SQL—and by running unit tests and generating documentation every time those models are built—dbt turns data transformation into software engineering. A pull request that fails a test never reaches production, so a logic error is caught while its author is still in the branch instead of two weeks later during an executive review.

But it’s not just dbt. If you live inside Google Cloud, you might reach for Dataform, which offers a similar Git-centric workflow tied directly to BigQuery. Databricks users lean on Delta Live Tables, where SQL or Python pipelines come with declared data-quality “expectations.” Snowflake users can do something similar through Snowpark, and AWS shops often pair Glue Studio with the Deequ quality library. The tooling differs, but the principle is identical: treat transformations like code, and broken logic stops feeling inevitable.

2. Data Observability

Smoke detectors” that alert you before execs ping you in Slack

Imagine a smoke detector that starts beeping ten minutes before your kitchen catches fire. That is the promise of modern data-observability platforms. Monte Carlo wires itself into warehouses like Snowflake or BigQuery and monitors five vital signs—freshness, volume, schema changes, null blow-ups, and statistical anomalies. The moment something drifts, the system traces every downstream dashboard or model likely to misbehave and pings the on-call channel in Slack or PagerDuty. The engineering team fixes the issue before Monday’s metrics meeting, not after.

Monte Carlo is not alone. Bigeye gives teams a drag-and-drop rule builder and ML-powered anomaly detection, Datafold highlights row-level diffs inside pull requests, and Anomalo runs fully automated statistical tests without code. If you prefer to operate solely inside the cloud console, Google’s Dataplex Quality, Azure Purview Profiler, or AWS CloudWatch paired with Deequ offer lighter-weight, native options. Regardless of vendor, observability compresses mean-time-to-detect from days to minutes, which is the oxygen data reliability needs.

3. Testing & Validation

Unit Test for Rows and Columns

Observability tells you when something is off; validation frameworks prove a dataset was right in the first place. Great Expectations lets teams describe rules—“this column can’t be null,” “ninety-five percent of values must fall inside this set”—in human-readable YAML or Python. The moment a file lands or a table builds, the rules run. Failures quarantine the offending rows or halt the pipeline entirely, turning “we hope the data’s clean” into a guarantee.

The same philosophy powers SodaCL, whose YAML syntax slots neatly into dbt jobs, and AWS Deequ, which embeds expectations inside Spark. Databricks engineers often declare quality checks directly in Delta Live Tables, while Google Cloud users write SQL assertions inside Dataform. However you frame the contract, the result is identical: corrupted data is rejected at the warehouse door, not discovered months later when a board deck looks suspicious.

4. Metadata & Lineage

Google Maps for your datasets and metrics.

When a metric goes wrong, the first question is always “where did this column come from?” DataHub, open-sourced by LinkedIn, answers that question by collecting metadata from warehouses, BI tools, and orchestration jobs, then drawing an end-to-end lineage graph. One glance shows the upstream source file, every intermediate model, and each dashboard that consumes the data. Owners are listed by name, documentation lives one click away, and any schema change triggers an automatic heads-up in Slack.

Enterprises sometimes choose Collibra for its heavyweight governance workflows or Atlan for its polished UI and Slack “data assistant.” Databricks customers gain fine-grained catalog governance through Unity Catalog, while lighter-weight open-source shops deploy Amundsen. There are also great options by the big cloud providers —Azure Purview, Google Data Catalog, and the AWS Glue Catalog all offer discovery and policy engines. Whichever path you pick, a shared map of data assets is a great step towards building a reliable platform.

5. Workflow Orchestration

The scheduler glue that keeps jobs running in the right order—every time.

All these layers need a conductor. Dagster frames data pipelines as Python graphs and treats every table or file as a first-class asset. If an upstream table is stale, Dagster refuses to build anything that depends on it; if a test fails, the job aborts and the UI highlights the failed node in red. The result is a pipeline that enforces quality gates automatically rather than trusting engineers to remember cron syntax.

Yes, Apache Airflow still remains popular for most teams with its vast library of operators that covers everything from Hive to Kubernetes. Others prefer the Pythonic ergonomics of Prefect 2.0, the managed convenience of Google Cloud Composer, or low-code visual builders like Azure Data Factory and AWS Step Functions. Regardless of preference, orchestration ensures tasks run in the right order, retries fire when APIs hiccup, and SLAs are defined in code instead of on sticky notes.

Advice Corner — How to Choose and Where to Start

If you’re a solo data pro eyeing these tools, begin with an honest self-audit. Rate yourself on SQL fluency, Git comfort, testing know-how, pipeline skills, and cloud familiarity. Pick one anchor capability—dbt if you love SQL, Airflow or Dagster if you enjoy orchestration, Great Expectations if data quality fascinates you—and build a free-tier side project. Contribute a pull request, share the link on LinkedIn, and translate what you learn into a quick win at work (automate one flaky query, add one data test). A single open-source contribution often carries more weight with recruiters than a page of résumé bullets.

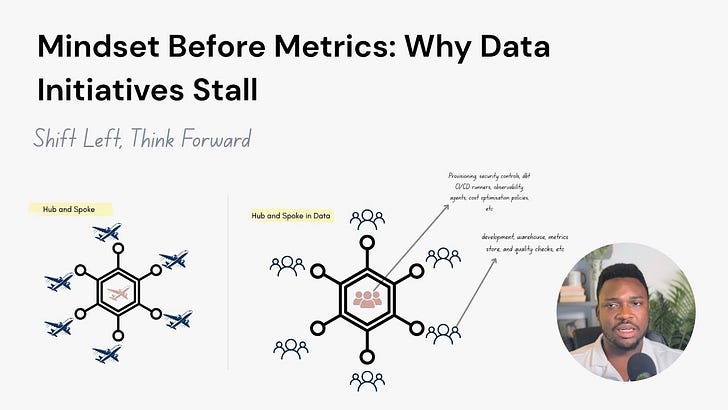

For companies, the roadmap scales with headcount. A seed-stage start-up can do wonders with BigQuery or Snowflake, dbt Cloud’s free tier, Great Expectations, and Monte Carlo Lite, all stewarded by one full-stack data engineer and a product-minded analyst. Mid-market firms thrive on a hub-and-spoke model: a central platform team maintains standards while embedded analytics engineers serve each domain. Enterprises layer Collibra-level governance, multi-region observability, and asset-aware orchestration on top of that foundation, tying data SLAs directly to OKRs.

Across sizes, the guidance is consistent: start small, automate repetitive pain points, surface wins early, and fund continuous upskilling. The goal isn’t a trophy stack—it’s shorter time-to-trust.

Closing Thoughts

The distance between bad data and broken business decisions used to be measured in months. With the five capabilities above—transformation-as-code, observability, validation, lineage, and orchestration—it shrinks to minutes. Layer these tools atop a culture that owns quality from the first byte, and your dashboards stop whispering surprises. They start telling the truth—on time, every time.

TL;DR

Shift-left only works when culture leads and tools amplify.

Five critical capabilities—transformation-as-code, observability, validation, lineage, orchestration—push quality checks upstream and slash time-to-detect from weeks to minutes.

dbt, Monte Carlo, Great Expectations, DataHub, and Dagster are flagship examples, with cloud and OSS cousins that cover the same ground.

Individuals should master one anchor tool, ship a side project, and translate quick wins back to their day job.

Start-ups can thrive with a lean, free-tier stack; mid-markets win with hub-and-spoke; enterprises layer governance and SLAs on top.

Bottom line: modern data teams engineer trust by design—not by heroic cleanup.

Notes

Transformation-as-Code

dbt → https://docs.getdbt.com/

Dataform (Google Cloud) → https://cloud.google.com/dataform/docs

Delta Live Tables (Databricks) → https://docs.databricks.com/workflows/delta-live-tables/

Snowflake Snowpark / Native Apps →

https://docs.snowflake.com/

AWS Glue Studio → https://docs.aws.amazon.com/glue/latest/ug/what-is-glue.html

Amazon Deequ → https://github.com/awslabs/deequ

Azure Synapse Mapping Data Flows → https://learn.microsoft.com/azure/synapse-analytics/data-integration/data-flow-overview

Data Observability

Monte Carlo → https://www.montecarlodata.com/

Bigeye → https://www.bigeye.com/

Datafold → https://datafold.com/

Anomalo → https://www.anomalo.com/

Databand (IBM) → https://www.ibm.com/products/databand

GCP Dataplex Data Quality → https://cloud.google.com/dataplex?hl=en

Azure Purview Profiler → https://learn.microsoft.com/azure/purview/

AWS CloudWatch + Deequ → https://docs.aws.amazon.com/cloudwatch/

Testing & Validation

Great Expectations → https://greatexpectations.io/docs/

Soda (SodaCL / Soda Cloud) → https://docs.soda.io/

AWS Deequ → https://github.com/awslabs/deequ

Delta Live Tables Expectations → https://docs.databricks.com/workflows/delta-live-tables/

Dataform Assertions → https://cloud.google.com/dataform?hl=en

Metadata & Lineage

DataHub → https://datahubproject.io/

Atlan → https://atlan.com/

Collibra → https://www.collibra.com/

Amundsen (OSS) → https://www.amundsen.io/

Unity Catalog (Databricks) → https://docs.databricks.com/data-governance/unity-catalog/index.html

Azure Purview → https://learn.microsoft.com/azure/purview/

Google Data Catalog → https://cloud.google.com/data-catalog

AWS Glue Data Catalog → ttps://docs.aws.amazon.com/glue/latest/dg/components-overview.html#data-catalog

Workflow Orchestration

Dagster → https://docs.dagster.io/

Apache Airflow → https://airflow.apache.org/docs/

Prefect 2.0 → https://docs.prefect.io/

Google Cloud Composer → https://cloud.google.com/composer/docs

AWS Step Functions → https://aws.amazon.com/step-functions/

Azure Data Factory → https://learn.microsoft.com/azure/data-factory/